Introduction

In today’s AI-driven world, having a personal AI assistant that understands your specific domain knowledge can be incredibly powerful. Whether you’re a professional seeking to streamline your workflow, a student organizing research, or an enthusiast wanting to explore specific topics in depth, training a local AI model with your own information creates a customized knowledge companion tailored to your needs.

This guide walks you through the process of training your local AI system with specialized information, allowing you to create a personalized knowledge base that you can query anytime.

Why Train Your Local AI?

- Personalized Knowledge Access: Instantly retrieve information from your documents, research, and specialized content

- Enhanced Productivity: Save hours of searching through documents by asking your AI directly

- Privacy Control: Keep sensitive information local without relying on cloud-based solutions

- Continuous Learning: Add new information as you acquire it, building an ever-expanding knowledge base

Prerequisites

Before beginning the training process, ensure you have:

- A compatible local AI system (options detailed below)

- Well-organized source materials in digital format

- Basic understanding of file organization and data preparation

- Sufficient storage space and computing resources

Choosing the Right Local AI Solution

Several local AI options exist, each with different requirements and capabilities:

Option 1: Local Large Language Models (LLMs)

Local LLMs like LLaMA, Orca, or Mistral can run directly on your machine without an internet connection. These require:

- Moderate to high-end GPU (8GB+ VRAM recommended)

- 16GB+ system RAM

- 20GB+ storage space for model files

Option 2: Retrieval-Augmented Generation (RAG) Systems

RAG systems combine a language model with a vector database of your documents:

- More efficient for large document collections

- Can run on lower-end hardware

- Focuses on retrieving and synthesizing from your specific content

Option 3: Fine-tuned Smaller Models

For specialized applications with limited scope:

- Lower hardware requirements

- Faster inference times

- More focused on specific domains

Data Preparation

The quality of your AI’s responses depends significantly on how well you prepare your training data.

1. Document Collection

Gather all relevant materials:

- Technical documentation

- Research papers

- Personal notes

- Presentations

- Books and articles

- Video transcripts

2. Data Cleaning

- Remove duplicate content

- Fix formatting issues

- Ensure consistent terminology

- Break down large documents into manageable chunks

- Convert everything to plain text or markdown format

3. Organization

Create a logical structure for your knowledge base:

- Group related documents in directories

- Use consistent naming conventions

- Create metadata files describing content when helpful

Training Process

For RAG Systems (Recommended for Beginners)

- Install a Local RAG Framework

- Options include LlamaIndex, Langchain, or ChromaDB with a local LLM

- Index Your Documents

import llama_index # Create document loader documents = SimpleDirectoryReader('your_knowledge_directory').load_data() # Create vector store index index = VectorStoreIndex.from_documents(documents) # Save index for future use index.storage_context.persist('knowledge_index' - Set Up Query Interface

# Load existing index index = load_index_from_storage(StorageContext.from_defaults(persist_dir=’knowledge_index’)) # Create query engine query_engine = index.as_query_engine() # Query your knowledge base response = query_engine.query(“What are the key principles of [your topic]?”) print(response)

For Fine-tuning a Local LLM

- Prepare Training Data in Instruction Format

- Create instruction-response pairs from your content

- Format according to your chosen model’s requirements

- Set Up Training Environment git clone https://github.com/relevant-llm-training-repo cd llm-training pip install -r requirements.txt

- Configure Training Parameters Create a config file with appropriate learning rate, batch size, and training steps

- Run Fine-tuning Process python train.py –model_name “base_model” –data_path “your_prepared_data.json” –output_dir “your_custom_model” –config “training_config.yaml”

- Test Your Model from transformers import AutoModelForCausalLM, AutoTokenizer model = AutoModelForCausalLM.from_pretrained(“your_custom_model”) tokenizer = AutoTokenizer.from_pretrained(“your_custom_model”) response = model.generate(tokenizer.encode(“Tell me about [your topic]”, return_tensors=”pt”)) print(tokenizer.decode(response[0]))

Creating a User-Friendly Interface

Once your AI is trained, create an accessible interface:

Command-Line Interface

def main():

while True:

query = input("Ask your AI: ")

if query.lower() == "exit":

break

response = query_engine.query(query)

print(f"\nAI Response: {response}\n")

if __name__ == "__main__":

main()Simple Web Interface with Gradio

import gradio as gr

def respond(question):

response = query_engine.query(question)

return str(response)

interface = gr.Interface(

fn=respond,

inputs=gr.Textbox(lines=2, placeholder="Ask about your knowledge base..."),

outputs="text"

)

interface.launch(share=True)Practical Applications

Your trained AI can now help with various tasks:

Creating Educational Materials

- Generate study guides from your notes

- Summarize complex topics

- Create practice questions and quizzes

Documentation Generation

- Automatically produce technical documentation

- Create user manuals from product specifications

- Generate API documentation from code comments

Presentation Creation

- Draft presentation outlines based on your content

- Generate supporting slides with key points

- Prepare speaking notes aligned with your expertise

Knowledge Synthesis

- Connect ideas across different documents

- Identify patterns in your research

- Generate insights from disparate sources

Maintenance and Improvement

To keep your AI knowledge base relevant:

- Regular Updates

- Add new information as you acquire it

- Schedule periodic reindexing of your knowledge base

- Performance Tracking

- Log questions your AI struggles with

- Note areas where responses could be improved

- Iterative Refinement

- Add clarifying documents for weak areas

- Reorganize content for better retrieval when needed

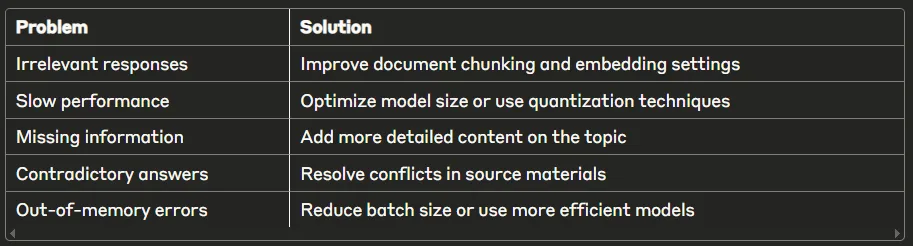

Troubleshooting Common Issues

Conclusion

Training your local AI with personalized knowledge transforms it from a general-purpose tool to a specialized assistant deeply familiar with your domain expertise. By following this guide, you’ve created a powerful knowledge companion that can help you generate content, answer questions, and synthesize information from your specialized knowledge base.

As you continue to add information and refine your system, your AI will become an increasingly valuable resource for learning, teaching, and content creation.

Resources for Further Learning

- LlamaIndex Documentation

- Hugging Face’s Guide to Fine-tuning

- RAG: Beyond Basic Retrieval

- Vector Databases Explained

Have fun!

Greetings,

Team Nexus