✨ Introduction

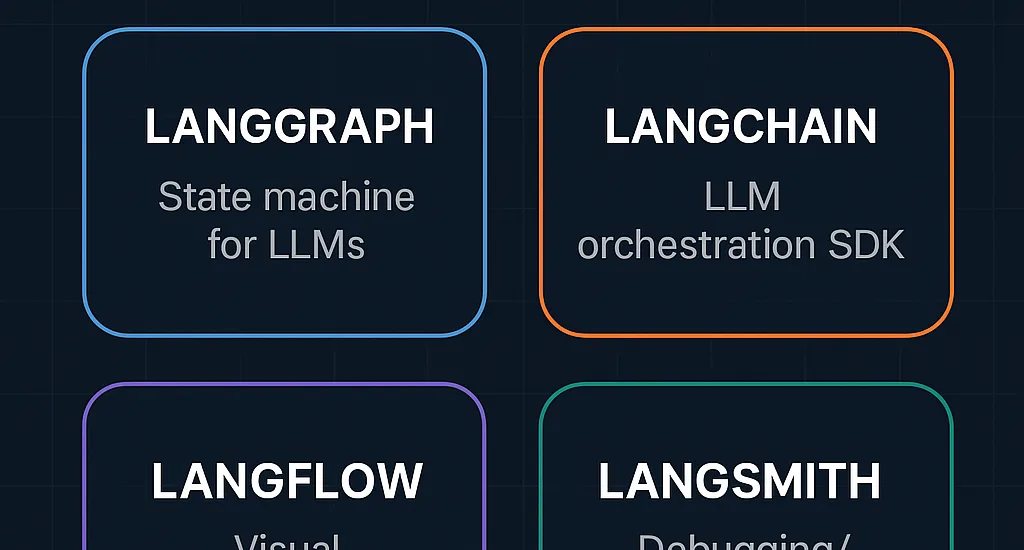

As AI-powered applications and intelligent agents become more common, developers need efficient ways to build, manage, and optimize large language model (LLM) workflows. LangGraph, LangChain, LangFlow, and LangSmith each cover a different part of that job: orchestration, composition, visual design, and observability. This article compares them side by side to help you decide which tool fits your project.

📃 Quick Overview

| Tool | Type | Best Use Case | Hosting |
|---|---|---|---|
| LangGraph | State machine for LLMs | Complex, branching workflows | Local/Cloud |
| LangChain | LLM orchestration SDK | Building AI agents, apps | Local/Cloud |
| LangFlow | Visual LangChain editor | Low-code/no-code AI tool creation | Web/Local |
| LangSmith | Debugging/Observability | Tracing, testing, evaluating agents | Cloud-based |

🧵 Tool Deep Dive

LangGraph 🧐

- Built by the LangChain team; works with LangChain components but can also be used on its own

- Enables branching logic and agent memory

- Inspired by state machines and graphs

- Ideal for async workflows

- Best for: Structured, resilient AI agent workflows
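
To make the state-machine idea concrete, here is a minimal sketch in plain Python. It does not use the LangGraph API; the node names, the `ROUTERS` table, and the `run_graph` helper are all hypothetical and only illustrate how a shared state flows through nodes while a router picks the next branch.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Node = Callable[[State], State]

END = "__end__"  # hypothetical sentinel marking the end of the graph


def classify(state: State) -> State:
    # Toy keyword check standing in for an LLM-based intent classifier.
    text = str(state["message"]).lower()
    intent = "billing" if ("invoice" in text or "refund" in text) else "general"
    return {**state, "intent": intent}


def billing(state: State) -> State:
    return {**state, "reply": "Routing you to billing support."}


def general(state: State) -> State:
    return {**state, "reply": "How can I help you today?"}


NODES: Dict[str, Node] = {"classify": classify, "billing": billing, "general": general}

# Each router reads the current state and returns the name of the next node.
ROUTERS: Dict[str, Callable[[State], str]] = {
    "classify": lambda s: str(s["intent"]),  # the branching step
    "billing": lambda s: END,
    "general": lambda s: END,
}


def run_graph(state: State, start: str = "classify", max_steps: int = 10) -> State:
    """Walk the graph until END, recording which nodes ran."""
    current = start
    history: List[str] = []
    for _ in range(max_steps):  # guard against accidental infinite loops
        if current == END:
            break
        state = NODES[current](state)
        history.append(current)
        current = ROUTERS[current](state)
    return {**state, "history": history}


result = run_graph({"message": "I need a refund for my invoice"})
print(result["history"], result["reply"])
```

The `history` list is a stand-in for the kind of memory a graph-based workflow carries between steps: every node sees what came before and can add to it.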

LangChain ⚙️

- Modular SDK for building LLM apps

- Includes chains, tools, agents, memory systems

- Wide community and documentation support

- Best for: Developers building scalable AI pipelines
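
The "chain" idea can be sketched without any library: small steps that each take a dictionary and return an enriched one, composed into a pipeline. The code below is plain Python, not the LangChain API. `DOCS`, `retrieve`, `build_prompt`, `fake_llm`, and `chain` are hypothetical names, and the "model" is a placeholder that simply returns the first retrieved document.

```python
import re
from functools import reduce
from typing import Callable, Dict, List

Data = Dict[str, object]
Step = Callable[[Data], Data]

# Hypothetical in-memory corpus standing in for a document store.
DOCS: List[str] = [
    "LangGraph models agent workflows as graphs.",
    "LangSmith records traces of LLM calls.",
    "Bananas are rich in potassium.",
]


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(data: Data) -> Data:
    # Keyword overlap stands in for a real vector search.
    query = tokens(str(data["question"]))
    hits = [doc for doc in DOCS if query & tokens(doc)]
    return {**data, "documents": hits}


def build_prompt(data: Data) -> Data:
    context = "\n".join(data["documents"])
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {data['question']}"
    return {**data, "prompt": prompt}


def fake_llm(data: Data) -> Data:
    # Placeholder for a model call: returns the first retrieved document.
    docs = data["documents"]
    answer = docs[0] if docs else "No relevant documents found."
    return {**data, "answer": answer}


def chain(*steps: Step) -> Step:
    """Compose steps left to right into a single callable."""
    return lambda data: reduce(lambda acc, step: step(acc), steps, data)


pipeline = chain(retrieve, build_prompt, fake_llm)
output = pipeline({"question": "What does LangSmith record?"})
print(output["answer"])
```

Because every step shares the same input and output shape, steps can be swapped or reordered freely, which is the modularity the bullets above describe.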

LangFlow 🧩

- Visual UI for LangChain components

- Great for prototyping and collaborative design

- Drag-and-drop editor

- Best for: Rapid experimentation without writing code

LangSmith 🛠

- Observability and evaluation for LLM chains

- Allows tracing, error analysis, and dataset testing

- Great for debugging and improving model behavior

- Best for: Monitoring, QA, and production debugging
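
As a rough illustration of what tracing means here, the sketch below records the inputs, output, error, and duration of each call in a list. It is plain Python and not the LangSmith SDK; the `traced` decorator, the `TRACES` list, and the `answer` function are hypothetical.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# Hypothetical in-memory trace store; a real tool would send these to a backend.
TRACES: List[Dict[str, Any]] = []


def traced(func: Callable[..., Any]) -> Callable[..., Any]:
    """Record inputs, output or error, and duration for every call."""

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        record: Dict[str, Any] = {
            "name": func.__name__,
            "inputs": {"args": args, "kwargs": kwargs},
        }
        start = time.perf_counter()
        try:
            record["output"] = func(*args, **kwargs)
            return record["output"]
        except Exception as exc:
            record["error"] = repr(exc)
            raise
        finally:
            # Runs on success and on failure, so every call leaves a trace.
            record["seconds"] = time.perf_counter() - start
            TRACES.append(record)

    return wrapper


@traced
def answer(question: str) -> str:
    # Stand-in for an LLM call.
    if not question.strip():
        raise ValueError("empty question")
    return f"Echo: {question}"


answer("What is LangSmith?")
try:
    answer("   ")
except ValueError:
    pass

failed = [t for t in TRACES if "error" in t]
print(len(TRACES), "calls traced,", len(failed), "failed")
```

Filtering the recorded traces for errors, as in the last lines, is the small-scale version of the error analysis described above.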

🌐 Comparison Table

| Feature / Tool | LangGraph | LangChain | LangFlow | LangSmith |
|---|---|---|---|---|
| Type | State Machine | SDK | Visual UI | Debugging/QA |
| Best for | Branching workflows | Building apps | Rapid prototyping | Tracing/Testing |
| Hosted? | Local/Cloud | Local/Cloud | Local/Web | Cloud |
| No-code Support | ❌ | ❌ | ✅ | ❌ |
| Observability | Basic | Basic | ❌ | ✅ ✅ ✅ |
| Custom Tooling | ✅ | ✅ | Limited | ❌ |

📊 When to Use Which One?

- LangGraph: Need robust branching logic, memory, and async behavior? Go with this.

- LangChain: Want full control to build and compose modular LLM components? Use this as your base.

- LangFlow: Working with teams or clients who need to visually build or understand the workflow? Start here.

- LangSmith: Already built something and want to analyze performance or fix errors? This is your go-to tool.

📅 Real-World Use Cases

- LangGraph: Building a support chatbot that switches intent paths and manages memory

- LangChain: Creating an LLM app that takes user input, searches documents, and summarizes results

- LangFlow: Prototyping a mental health AI assistant with minimal code

- LangSmith: Debugging incorrect outputs from AI agents used in customer service

📈 Pros & Cons

LangGraph

- ✅ Robust async flows

- ✅ Graph-based structure fits branching logic

- ❌ Slightly steeper learning curve

LangChain

- ✅ Full modularity

- ✅ Active ecosystem

- ❌ Can get complex in large-scale apps

LangFlow

- ✅ Visual editing

- ✅ Fast prototyping

- ❌ Limited advanced customization

LangSmith

- ✅ Top-tier debugging and tracing

- ✅ Dataset and evaluation tools

- ❌ Primarily a hosted cloud service (self-hosting is an enterprise option), so less useful in offline settings

🚀 Conclusion

LangGraph, LangChain, LangFlow, and LangSmith each solve a unique challenge in the LLM workflow space. Whether you’re building from scratch, prototyping fast, or debugging production workflows, the right choice depends on your goals.

“For structured workflows, go with LangGraph. For flexibility and scale, use LangChain. For easy UI, try LangFlow. And for observability and QA, LangSmith is your best friend.”

Let us know which tool you use and why in the comments!