Excerpt / Summary

Running large language models locally on your own CPU/GPU has become a practical alternative to cloud “premium” models—especially for teams that need privacy, offline operation, full control over prompts and system behavior, and the ability to customize outputs. This technical guide covers 10 of the best local LLM runtimes and stacks (CLI, GUI, server, and developer frameworks), how to source models, what “unlocked” really means in practice, and how to validate model behavior responsibly. It also includes hardware sizing, quantization formats (GGUF, GPTQ, AWQ), and real deployment patterns like OpenAI-compatible APIs and containerized inference.

Introduction: What This List Covers (and Why It’s Useful)

Local inference has matured rapidly: today you can run capable LLMs on a laptop CPU, a single consumer GPU, or a small home server, often with an OpenAI-compatible API endpoint for apps and agents. Compared to cloud premium models, local stacks typically offer:

- Privacy by default: prompts and outputs stay on your machine.

- Offline / air-gapped operation: suitable for restricted networks.

- No quotas or rate limits: throughput is limited only by hardware.

- Customization: you can select base vs instruct vs roleplay-tuned models, choose quantization levels, set context sizes, and even fine-tune.

- More controllable behavior: you can run models with fewer built-in refusals and add your own safety controls instead of relying on a provider’s policy layer.

Important note on “unlocked/uncensored”: People use these words loosely. In practice it usually means the model has been fine-tuned (or configured) to refuse fewer prompts, or it lacks a strong safety-alignment layer. That does not guarantee accuracy, and it does not mean you should use it for wrongdoing. In this article, examples focus on legitimate use cases (e.g., security education, malware analysis in sandboxes, red-team simulations, policy testing, and research) and on how to implement your own governance controls locally.

This list is ordered from most approachable to most configurable, and each item includes: what it is, why it’s included, and key features/benefits. Where helpful, image descriptions are included to support publishing.

Item 1: Ollama (Fastest Path to Local Models + Simple Model Management)

Description and details

Ollama is a developer-friendly local model runner that prioritizes a clean UX: pull models, run them, expose an API, and integrate into apps quickly. It works well for local chat, agent prototypes, and building services that need an OpenAI-like interface without the complexity of full MLOps.

Typical workflow:

- Install Ollama on macOS/Linux/Windows

- Run ollama pull <model> and ollama run <model>

- Use the local HTTP API for integrations
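As a rough sketch of the API step in the workflow above, the snippet below calls Ollama's local HTTP API using only the Python standard library. It assumes the server is listening on its default address (http://localhost:11434) and that a model has already been pulled. The helper names are illustrative and are not part of any official Ollama client.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False the whole completion arrives in the "response" field.
    return body["response"]
```

With the server running, a call such as `generate("llama3", "Explain quantization in one sentence.")` would return the completion as a string; `llama3` is a placeholder for whichever model you pulled.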

Image description: A terminal screenshot showing ollama pull and ollama run with token streaming output and GPU utilization in a separate system monitor panel.

Why it’s included

Ollama reduces friction: it’s one of the most reliable “it just works” options for running quantized models locally with minimal setup. For many teams, it’s the fastest route from “idea” to “working local LLM endpoint.”

Key features or benefits

- Quick model pulls with a consistent UX for starting/stopping models.

- OpenAI-style local API patterns (good for drop-in app integration).

- Great for iterative prompting and local evaluation loops.

Resource: https://ollama.com

Item 2: LM Studio (Best Local GUI for Testing Models and Comparing Quantizations)

Description and details

LM Studio is a desktop GUI for discovering, downloading, and running local models—especially GGUF builds. It’s well suited for technical users who want to experiment with different models, quantization levels, context sizes, and sampling parameters without living in the terminal.

LM Studio typically supports:

- One-click model download

- Chat sessions and prompt templates

- Local server mode for app integration

- Basic performance insights (tokens/sec, context usage)

Image description: A desktop UI showing a left sidebar of downloaded GGUF models (e.g., 8B/13B variants), a center chat window, and a right panel with temperature/top-p/max tokens and context settings.

Why it’s included

GUI tooling matters for productivity: LM Studio makes it easy to validate whether a model fits your task (coding, analysis, roleplay, summarization) and to compare “unlocked” fine-tunes against more conservative instruct variants.

Key features or benefits

- Model discovery and management optimized for local workflows.

- Parameter tuning without editing config files.

- Server mode to turn desktop inference into a local endpoint.

Resource: https://lmstudio.ai

Item 3: GPT4All (Offline-First Local Chat + Easy CPU Operation)

Description and details

GPT4All is an offline-oriented local LLM ecosystem with a desktop app and an emphasis on CPU-friendly models. It’s a solid choice when you need “good enough” local inference on machines without strong GPUs, or you want a self-contained offline assistant for documentation, knowledge work, and experimentation.

It commonly provides:

- Simple model downloads via UI

- Local chat and prompt history

- Support for multiple model families (depending on releases)

Image description: A minimal chat UI running on a laptop with no discrete GPU, showing steady token streaming and a small “offline” indicator.

Why it’s included

Many “local LLM” articles over-index on GPU rigs. GPT4All is a practical reminder that useful local inference can still happen on CPU—especially with smaller models and careful quantization.

Key features or benefits

- CPU-friendly default experience.

- Offline-by-design for private environments.

- Low setup overhead for non-ML users.

Resource: https://gpt4all.io

Item 4: llama.cpp (Core Engine for GGUF Inference on CPU/GPU)

Description and details

llama.cpp is one of the foundational projects enabling high-performance local inference—especially with GGUF quantized models. It supports CPU inference extremely well and can leverage GPU acceleration on supported backends. Many higher-level tools (including some GUIs) use llama.cpp under the hood.

From a technical standpoint, llama.cpp is where you go when you want:

- Maximum control over inference flags

- Benchmarking and reproducibility

- Fine-grained control of context size and KV cache behavior
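To make the context-size and KV cache trade-off concrete, here is a back-of-the-envelope estimate of KV cache memory. It uses the standard formula for a decoder-only transformer (keys plus values, per layer, per KV head, per position). The layer and head counts below are illustrative assumptions for a 7B-class shape, not values read from any particular model file, and real runners may add overhead or use a quantized cache.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   n_ctx: int, bytes_per_element: int = 2) -> int:
    """Approximate KV cache size for one sequence.

    The leading 2 accounts for storing both keys and values.
    bytes_per_element=2 assumes a 16-bit cache.
    """
    return 2 * n_layers * n_kv_heads * head_dim * n_ctx * bytes_per_element


def to_gib(n_bytes: int) -> float:
    """Convert bytes to GiB."""
    return n_bytes / 2**30


# Illustrative shape: 32 layers, head dimension 128, 4096-token context.
print(to_gib(kv_cache_bytes(32, 32, 128, 4096)))  # 32 KV heads -> 2.0
print(to_gib(kv_cache_bytes(32, 8, 128, 4096)))   # 8 KV heads (grouped-query) -> 0.5
```

Because the cost is linear in `n_ctx`, doubling the context doubles the cache, which is why the context-size flag matters as much as the quantization level on memory-constrained machines.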

Image description: A benchmark output screenshot showing prompt processing time, generation tokens/sec, and memory usage under different quantization levels (Q4 vs Q5 vs Q8).

Why it’s included

If you care about performance per watt, deterministic deployments, or building your own local inference service, llama.cpp is often the most direct path. It’s also the easiest place to see what the runtime is actually doing, because there are no wrapper or abstraction layers between you and the inference flags.

Key features or benefits

- Excellent CPU performance with quantized weights.

- GGUF ecosystem (widely shared quantizations).

- Deep configurability for power users and researchers.

Resource: https://github.com/ggerganov/llama.cpp

Item 5: Text Generation WebUI (oobabooga) (Experimentation Hub for Multiple Backends)

Description and details

Text Generation WebUI (often called “oobabooga webui”) is a powerful sandbox for running local LLMs with different loaders and backends (GGUF via llama.cpp, GPTQ/AWQ, and more depending on setup). It’s popular for rapid testing, roleplay configurations, prompt templates, and extensions.

It’s particularly useful when you want:

- Switchable inference engines and quant formats

- Extensions (character cards, advanced sampling, UI tools)

- Reproducible experiment presets

Image description: A browser UI with tabs for Model, Parameters, Extensions, and Session; the Model tab shows selectable loaders (GGUF/GPTQ) and VRAM offload sliders.

Why it’s included

This is the “workbench” option: not the simplest, but one of the most flexible for testing multiple model types and behaviors. If you’re comparing an “unlocked” fine-tune vs a standard instruct model, WebUI makes it easy to A/B test prompts and sampling settings.

Key features or benefits

- Broad model format support across local ecosystems.

- Highly configurable decoding (temperature, top-p, repetition penalties).

- Extensions for experimentation workflows.
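For readers who want to see what the temperature and top-p controls do, the sketch below implements both over a toy list of logits in pure Python. It is a simplified illustration of the general technique, not the WebUI's or any backend's actual sampler, and all function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the smallest set of tokens whose cumulative probability reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    # Renormalise so the surviving probabilities sum to 1.
    return {i: probs[i] / mass for i in kept}


def sample_token(logits, temperature=0.8, top_p=0.9, rng=None):
    """Sample one token id using temperature scaling followed by top-p filtering."""
    rng = rng or random.Random()
    candidates = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    ids = list(candidates)
    return rng.choices(ids, weights=[candidates[i] for i in ids], k=1)[0]
```

When A/B testing two models, fixing the seed (for example `rng=random.Random(0)`) and the sampling parameters keeps the comparison about the models rather than about sampling noise.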

Resource: https://github.com/oobabooga/text-generation-webui

Item 6: LocalAI (OpenAI-Compatible Local Inference Server via Docker)

Description and details

LocalAI is designed to run local models behind an API that mimics popular cloud interfaces. It’s commonly deployed via Docker and can power local applications, internal tools, and self-hosted assistants while keeping the integration surface similar to OpenAI-style APIs.

Where LocalAI shines:

- Running as a service on a workstation or server

- Supporting multiple model formats (depending on configuration)

- Making local inference consumable by existing apps with minimal changes
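The "minimal changes" point can be illustrated with a small client for an OpenAI-style chat completions endpoint. This sketch assumes the server is reachable at http://localhost:8080 and exposes the conventional `/v1/chat/completions` path; the model name you pass must match one configured on your server. The helper names are illustrative.

```python
import json
import urllib.request

# Assumption: a local OpenAI-compatible server is listening here.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(model, user_message, system_prompt=None,
                       temperature=0.2, base_url=BASE_URL):
    """Build a POST request in the OpenAI chat completions format."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    payload = {"model": model, "messages": messages, "temperature": temperature}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response body."""
    return response_body["choices"][0]["message"]["content"]


def chat(model, user_message, system_prompt=None, timeout=120.0):
    """Send one chat turn to the local server and return the reply text."""
    request = build_chat_request(model, user_message, system_prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.loads(response.read().decode("utf-8")))
```

Because only `BASE_URL` is server-specific, the same client should work against other runners that expose an OpenAI-compatible endpoint once the base URL is changed.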

Image description: A diagram showing a developer app calling an OpenAI-like endpoint at http://localhost:8080, routed to LocalAI, which then calls a local model runner (GGUF/llama.cpp) on GPU.

Why it’s included

LocalAI is a practical bridge between “local model enthusiasts” and “production-minded developers.” If your goal is to replace a cloud API with local inference for cost/privacy/control reasons, LocalAI is a strong candidate.

Key features or benefits

- OpenAI-compatible API surface for faster integration.

- Containerized deployment for repeatability.

- Good fit for self-hosted assistants and internal tooling.

Resource: https://github.com/mudler/LocalAI

Item 7: vLLM (High-Throughput GPU Serving for Multi-User Local Deployments)

Description and details

vLLM is a high-performance inference engine designed for throughput and efficient KV cache management, especially on GPUs. If you want to serve many concurrent users (or run agentic workloads that generate lots of tokens), vLLM often outperforms simpler runners.

Technical highlights include:

- Efficient batching and memory utilization

- Strong performance for larger models on capable GPUs

- Better server-like behavior for teams (vs single-user desktop tools)
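To check whether a serving stack actually benefits from concurrency, a small client-side load test is often enough. The sketch below fires requests from a thread pool and reports aggregate throughput and mean latency. `fake_request` is a stand-in assumption so the example runs without a server; in practice you would replace it with a function that calls your endpoint and returns the number of generated tokens.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(prompt: str) -> int:
    """Hypothetical stand-in for an HTTP call; returns a token count."""
    time.sleep(0.01)  # simulate network and generation time
    return len(prompt.split())


def run_load_test(send, prompts, concurrency):
    """Send all prompts with the given concurrency and summarise the results."""
    if not prompts:
        raise ValueError("prompts must not be empty")

    def timed(prompt):
        started = time.perf_counter()
        tokens = send(prompt)
        return tokens, time.perf_counter() - started

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed, prompts))
    wall = time.perf_counter() - wall_start

    total_tokens = sum(tokens for tokens, _ in results)
    return {
        "requests": len(results),
        "total_tokens": total_tokens,
        "wall_seconds": wall,
        "tokens_per_second": total_tokens / wall,
        "mean_latency_seconds": sum(lat for _, lat in results) / len(results),
    }
```

Running the same prompt set at concurrency 1, 4, and 16 and comparing `tokens_per_second` gives a quick read on how well the server batches requests under load.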

Image description: A metrics dashboard showing requests per second, average latency, GPU memory usage, and token throughput under concurrent load testing.

Why it’s included

Unlocked local models aren’t just for a single user on a laptop. If you’re building an internal service (red-team lab assistant, code review bot, SOC helper) and need concurrency, vLLM is the “scale-up” path on local GPUs.

Key features or benefits

- High throughput for multi-user inference.

- Better GPU utilization under load.

- Production-friendly serving model compared to desktop GUIs.

Resource: https://github.com/vllm-project/vllm

Item 8: Hugging Face Transformers (Maximum Flexibility for Research and Custom Pipelines)

Description and details

Transformers is the standard Python framework for loading and running models from the Hugging Face ecosystem. It’s the right choice when you need full programmatic control: custom tokenization, logprobs, tool-calling experiments, RAG pipelines, fine-tuning, evaluation harnesses, and integration with PyTorch.

This option typically involves:

- Using transformers + torch

- Choosing a quantization approach (bitsandbytes, GPTQ/AWQ integrations, etc.)

- Deploying as a script, FastAPI service, or batch job

Image description: A code snippet image showing a Python pipeline loading a model, applying a quantization config, then generating tokens with streaming output in a notebook.

Why it’s included

If your goal is not only to run a model but to build a custom system around it—evaluation, governance filters, telemetry, retrieval, fine-tuning—Transformers is the most flexible foundation.

Key features or benefits

- Largest ecosystem of models, datasets, and tooling.

- Research-grade control over inference and training loops.

- Easy integration with RAG stacks and vector databases.

Resource: https://huggingface.co/docs/transformers

Item 9: KoboldCpp (Roleplay-Oriented Local GGUF Runner with Simple Setup)

Description and details

KoboldCpp is a convenient way to run GGUF models with a focus on interactive storytelling and roleplay UX patterns. While it’s frequently used for creative writing, the technical takeaway is that it offers a streamlined GGUF experience that can be easier than assembling a full stack.

Common uses:

- Interactive long-form generation

- Prompt formats optimized for character-driven conversations

- Accessible configuration compared to raw CLI tools

Image description: A web UI showing a “Story” mode with a context window, author’s notes, and generation controls tuned for long-form continuity.

Why it’s included

Many “unlocked” fine-tunes are popular because they are more permissive and better at roleplay continuity. KoboldCpp is a practical runner for testing those behaviors, especially with longer context configurations.

Key features or benefits

- Simple GGUF execution with a roleplay-friendly UX.

- Good for long-context experimentation (hardware permitting).

- Lower setup complexity than multi-backend frameworks.

Resource: https://github.com/LostRuins/koboldcpp

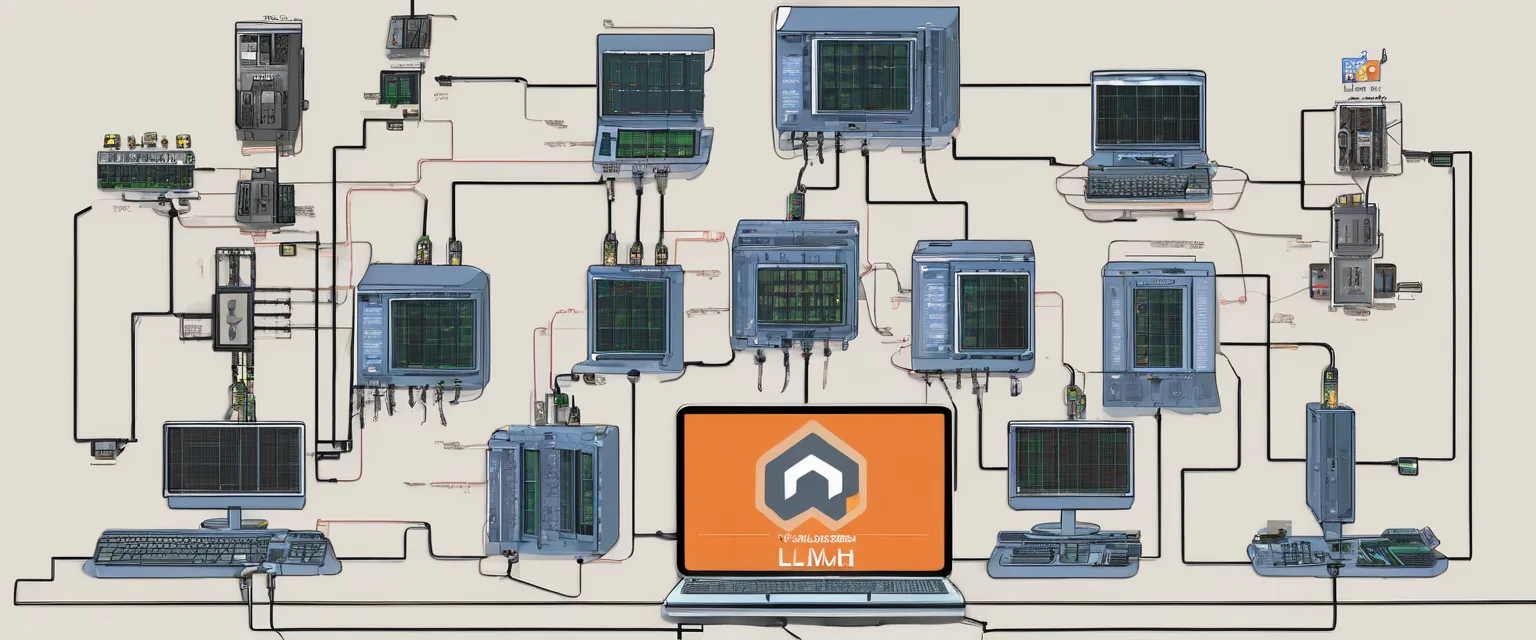

Item 10: Open WebUI + Local Runners (Best “ChatGPT-Like” Self-Hosted Front End)

Description and details

If you want a polished, multi-user, ChatGPT-like interface while still running models locally, a common pattern is to pair a front end such as Open WebUI with a runner like Ollama or LocalAI. This gives you:

- User accounts (team usage)

- Conversation management

- Centralized model access

- A clean UI that non-technical users can adopt

Image description: A self-hosted chat UI with a model dropdown (multiple local models), per-chat system prompts, and an admin page listing available backends.

Why it’s included

Local inference is often blocked not by model performance but by usability. A strong self-hosted UI removes friction and helps internal adoption without exposing data to third-party SaaS chat tools.

Key features or benefits

- Enterprise-style UX for local models.

- Separation of concerns: UI vs inference engine.

- Good for teams and shared home-lab deployments.

Resource: https://github.com/open-webui/open-webui

How to Find Local “Unlocked” Models (and What to Look For)

Where to find them

- Hugging Face Model Hub: the largest directory of downloadable weights and quantizations. Search for model families plus keywords like “GGUF”, “no refusal”, “uncensored”, “roleplay”, “Dolphin”, “Wizard”, “Nous”. Resource: https://huggingface.co/models

- Community curation: forums and communities that compare local models, quantizations, and prompt formats (e.g., r/LocalLLaMA). These are useful for practical performance notes and “works on my GPU” reports.

How to interpret “unlocked” in model cards

Model cards often include signals such as:

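- Fine-tune intent: “roleplay,” “less refusal,” “alignment removed,” “uncensored,” or “jailbreak-resilient.” The last term is used inconsistently; on some cards it means the model keeps following the operator’s instructions when a prompt tries to re-impose refusals, so read the card rather than assuming the usual meaning.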

- Dataset and training notes: permissive conversation datasets, refusal removal datasets, or instruction mixes.

- Prompt format requirements: e.g., ChatML, Alpaca, Llama Instruct templates. Using the wrong template can look like “the model is dumb” when it’s actually misprompted.
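The prompt-format point is easier to see side by side. The sketch below renders the same request in two widely used layouts, ChatML and the Alpaca instruction format. The exact template a given fine-tune expects can differ in small details, so treat these as common forms and defer to the model card; the function names are illustrative.

```python
def format_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts in ChatML layout."""
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    ]
    if add_generation_prompt:
        # Leave the assistant turn open so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


def format_alpaca(instruction):
    """Render a single instruction in the common Alpaca layout."""
    return (
        "Below is an instruction that describes a task. "
        "Write a response that appropriately completes the request.\n\n"
        f"### Instruction:\n{instruction}\n\n### Response:\n"
    )


messages = [
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": "Summarize what GGUF is."},
]
print(format_chatml(messages))
print(format_alpaca("Summarize what GGUF is."))
```

Sending a ChatML-trained model the Alpaca layout (or the reverse) usually still produces text, just noticeably worse text, which is why a template mismatch is easy to mistake for a weak model.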

How to verify capability without doing anything harmful

Instead of testing for wrongdoing, validate “unlocking” using safe checks:

- Refusal behavior tests: ask about benign but commonly refused policy topics (e.g., “Summarize common categories of restricted content in AI policies and why they matter”). Compare refusal rates.

- Policy simulation: ask it to draft an internal security policy, or to explain how to harden a system, create detection rules, or review code for vulnerabilities. Models that are overly locked-down sometimes refuse even defensive security requests.

- Instruction hierarchy robustness: test whether a model follows your system prompt reliably (useful for building your own guardrails locally).
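The refusal-rate comparison above can be automated with a crude heuristic. This sketch flags a response as a refusal if it opens with a typical refusal phrase, then computes the rate per model. The marker list is an assumption and will miss or misclassify some responses, so spot-check the results by hand before drawing conclusions.

```python
# Assumed refusal phrases; extend this list for the models you test.
REFUSAL_MARKERS = (
    "i can't",
    "i cannot",
    "i won't",
    "i'm sorry",
    "i'm unable",
    "i am unable",
)


def looks_like_refusal(text: str, window: int = 200) -> bool:
    """Heuristic: does the opening of the response contain a refusal phrase?"""
    opening = text.lower().replace("\u2019", "'")[:window]
    return any(marker in opening for marker in REFUSAL_MARKERS)


def refusal_rate(responses) -> float:
    """Fraction of responses flagged as refusals (0.0 for an empty list)."""
    if not responses:
        return 0.0
    return sum(looks_like_refusal(r) for r in responses) / len(responses)


def compare_models(responses_by_model: dict) -> dict:
    """Map each model name to its refusal rate on the same prompt set."""
    return {name: refusal_rate(rs) for name, rs in responses_by_model.items()}
```

Feeding both models the same benign prompt set (policy summaries, hardening checklists, defensive code review) and calling `compare_models` gives a like-for-like number without ever prompting for harmful content.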

Model Formats and Quantization (Why GGUF/GPTQ/AWQ Matter)

Local inference is mostly about memory and bandwidth. Quantization reduces the memory footprint of model weights and can massively expand what runs on consumer hardware.

- GGUF: common for llama.cpp-based runners (CPU-friendly, broad compatibility).

- GPTQ / AWQ: quantization approaches often used for GPU inference in certain stacks.

- 4-bit vs 5-bit vs 8-bit: fewer bits per weight means a smaller memory footprint and often faster generation, at some cost in output quality. Many users find Q4/Q5 the sweet spot for local interactive chat.

Practical sizing (very rough rule-of-thumb): if you want smooth performance, ensure you have enough RAM/VRAM to hold the quantized weights plus KV cache for your target context length.
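The rule of thumb above can be turned into a quick calculation. The sketch below estimates weight memory from parameter count and bits per weight, then checks whether weights plus a given KV cache budget fit in available memory. The 10% overhead factor is an assumption standing in for runtime buffers, and `bits_per_weight` should be treated as an effective figure, since quantized formats often land slightly above their nominal bit width.

```python
def weights_gib(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in GiB."""
    return params_billions * 1e9 * bits_per_weight / 8 / 2**30


def fits(params_billions: float, bits_per_weight: float,
         kv_cache_gib: float, available_gib: float,
         overhead_fraction: float = 0.10):
    """Return (fits, needed_gib) for weights plus KV cache plus assumed overhead."""
    needed = (weights_gib(params_billions, bits_per_weight) + kv_cache_gib) * (
        1 + overhead_fraction
    )
    return needed <= available_gib, needed


# Illustrative checks against 8 GiB of memory.
print(fits(7, 4.5, 1.0, 8.0))   # 7B at ~4.5 effective bits: fits
print(fits(13, 8.0, 2.0, 8.0))  # 13B at 8 bits: does not fit
```

If the check fails, the usual levers are a lower-bit quantization, a shorter context, or offloading only some layers to the GPU.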

Hardware Guidance (CPU vs GPU, VRAM, and Realistic Expectations)

CPU-only

- Best for smaller quantized models (often under ~13B parameters depending on quant and patience).

- Expect lower tokens/sec, but strong privacy and simplicity.

Consumer GPU (8–16 GB VRAM)

- Excellent for 7B–13B models at higher speed.

- Good interactive chat and coding assistance, especially with well-tuned quantizations.

High-end GPU (24–48 GB VRAM and beyond)

- Enables larger models and/or larger context sizes with better throughput.

- Better for multi-user serving, long-context RAG, and heavier agent workloads.

What Local Models Can Enable (Beyond “Premium Cloud” Constraints)

Local deployments can do things cloud models often can’t. This is not because local models are more capable, but because you control the entire runtime and policy layer:

- Custom governance: implement your own allow/deny policies, logging, and redaction rules tailored to your environment.

- Specialized fine-tunes: train on internal codebases, documentation, ticket histories, and domain corpora.

- Security research workflows: vulnerability triage, secure code review, threat modeling, and malware analysis summaries in sandboxed environments.

- Air-gapped assistants: for regulated environments or sensitive IP.

- Long-context experimentation: you can choose runners and builds optimized for your context needs without provider-imposed caps or throttles.

Responsible use note: If you deploy a more permissive model, pair it with local controls—input validation, safe-completion filters, role-based access, audit logging, and strict network sandboxing for any tool-using agents.
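A minimal sketch of what such local controls might look like is shown below: a wrapper that enforces role-based access, rejects prompts matching a deny list, redacts sensitive patterns from output, and records every decision in an audit log. The patterns, role table, and function names are all hypothetical placeholders; a real deployment would need policies designed for its own environment.

```python
import re
import time

# Hypothetical policy tables; replace with rules for your environment.
DENY_PATTERNS = [re.compile(r"(?i)\bignore (all )?previous instructions\b")]
REDACT_PATTERNS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[REDACTED-ID]"),
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[REDACTED-EMAIL]"),
]
ROLE_PERMISSIONS = {"analyst": {"chat"}, "admin": {"chat", "tools"}}


def redact(text: str) -> str:
    """Replace sensitive-looking substrings with placeholders."""
    for pattern, replacement in REDACT_PATTERNS:
        text = pattern.sub(replacement, text)
    return text


def guarded_generate(generate, prompt, user, role, audit_log, action="chat"):
    """Run `generate(prompt)` only if role and input checks pass; log every decision."""
    entry = {"ts": time.time(), "user": user, "role": role, "action": action}
    if action not in ROLE_PERMISSIONS.get(role, set()):
        entry["decision"] = "denied:role"
        audit_log.append(entry)
        raise PermissionError(f"role {role!r} may not perform {action!r}")
    if any(p.search(prompt) for p in DENY_PATTERNS):
        entry["decision"] = "denied:input"
        audit_log.append(entry)
        raise ValueError("prompt rejected by input policy")
    output = redact(generate(prompt))
    entry["decision"] = "allowed"
    entry["prompt"] = redact(prompt)  # store the redacted prompt, never the raw one
    audit_log.append(entry)
    return output
```

Here `generate` is any callable that takes a prompt and returns text, so the same wrapper can sit in front of whichever runner you chose; `audit_log` is a plain list in this sketch but would typically be an append-only file or log service.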

Honorable Mentions (Tools That Almost Made the Top 10)

ExLlama / ExLlamaV2

Highly optimized GPU inference for certain quantized formats. Great for squeezing performance out of a single GPU when supported by your chosen model build.

TensorRT-LLM

NVIDIA-focused acceleration stack aimed at production inference. Higher setup complexity, but excellent performance if you commit to the ecosystem.

Docker + Proxmox GPU Passthrough

Not a model runner itself, but a common homelab pattern: isolate inference services in VMs/containers, pass through a GPU, and expose the LLM as an internal API endpoint.

Conclusion: Build Local, Then Add the Guardrails You Actually Need

Local LLMs have crossed the threshold from hobby to practical engineering option. Whether your priority is privacy, offline access, cost control, customization, or the ability to test model behavior without provider policy layers, the stacks above cover the most effective ways to run models on CPU/GPU today.

Next steps:

- Pick a runner (Ollama/LM Studio for speed; LocalAI/vLLM for service deployments; Transformers for custom research).

- Select a model format that matches your hardware (GGUF for broad CPU/GPU compatibility is a strong default).

- Validate behavior with safe evaluation prompts, then implement your own governance controls before exposing it to others.
